Let’s be honest. Personalization feels like magic when it works—like your favorite coffee shop knowing your order before you speak. But when it feels creepy? That’s the privacy line being crossed, and users are sprinting in the other direction.

Here’s the deal: you can have both. You can build experiences that feel uniquely tailored without treating user data like an all-you-can-eat buffet. It’s not about locking everything down. It’s about being smart, transparent, and, frankly, respectful. This guide walks you through how to do just that.

The Core Tension: Relevance vs. Respect

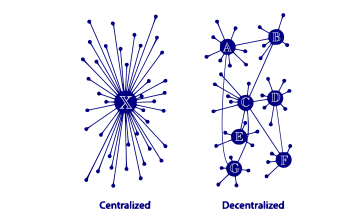

AI thrives on data. The more it knows, the better it predicts. Privacy, on the other hand, is about minimizing data collection and use. Seems like a standoff, right? Well, it doesn’t have to be. The key shift is moving from identifying the individual to understanding the context or segment.

Think of it like this. Instead of tracking “Jane Doe, age 34, who bought running shoes on Tuesday,” you focus on “a user in the ‘marathon trainer’ segment currently browsing for hydration gear.” You get the relevance without the invasive dossier.

First, Lay Your Ethical Foundation

Before you write a single line of code, get your principles straight. This isn’t just compliance; it’s brand trust.

- Data Minimization is Your New Mantra. Only collect what you absolutely need for the personalization goal. Do you really need a birthdate to recommend a blog article? Probably not.

- Transparency Over Perfection. Clearly explain what data you’re using and why. A simple, jargon-free notice beats a cryptic, thousand-word privacy policy any day.

- User Control is Non-Negotiable. Make opt-out, data access, and deletion requests easy to find and execute. Not buried in a labyrinthine menu.
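
To make data minimization concrete, here is a minimal sketch of an allowlist filter applied before anything is stored. The field names and the `minimize` helper are hypothetical, chosen only for illustration; your own allowlist depends on what your personalization goal actually needs.

```python
# Hypothetical allowlist: the only fields this personalization goal needs.
ALLOWED_FIELDS = {"article_category", "device_type", "region"}


def minimize(event: dict) -> dict:
    """Keep only allowlisted fields; everything else is dropped before storage."""
    return {key: value for key, value in event.items() if key in ALLOWED_FIELDS}


raw_event = {
    "article_category": "gardening",
    "device_type": "mobile",
    "region": "London",
    "email": "jane@example.com",  # not needed to recommend an article
    "birthdate": "1990-04-12",    # not needed either
}

stored_event = minimize(raw_event)
```

An allowlist fails safe: a new field added upstream is dropped by default until someone decides it is genuinely needed.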

Practical Guide 1: Start with On-Site, Anonymous Signals

You’d be surprised how much you can personalize without ever knowing a user’s name or email. This is your low-hanging, high-privacy fruit.

- Leverage Real-Time Behavior: Page views, time on page, scroll depth, and click patterns. A user reading three articles on “beginner gardening” is signaling interest—you can recommend a fourth without storing a personal identifier.

- Contextualize with Session Data: Device type, time of day, geographic region (city/country level, not precise GPS). Recommending hearty soups on a rainy evening in London? That’s smart, contextual, and anonymous.

- Use Zero-Party Data Wisely: This is data a user intentionally gives you—like preferences selected in a “tell us what you like” onboarding quiz. It’s gold because it’s consented and explicit.
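
The "beginner gardening" example above can be sketched in a few lines. This is an illustration, not a production recommender: the `CATALOG` mapping and the `recommend` function are hypothetical, and the only input is the list of pages viewed in the current session, with no user identifier anywhere.

```python
from collections import Counter
from typing import List

# Hypothetical catalog: article title -> topic.
CATALOG = {
    "Soil basics": "beginner gardening",
    "Watering 101": "beginner gardening",
    "First raised bed": "beginner gardening",
    "Easy herbs to grow": "beginner gardening",
    "Marathon hydration": "running",
}


def recommend(viewed_titles: List[str], limit: int = 1) -> List[str]:
    """Suggest unread articles from the topic viewed most often this session."""
    topics = Counter(CATALOG[title] for title in viewed_titles if title in CATALOG)
    if not topics:
        return []
    top_topic = topics.most_common(1)[0][0]
    unread = [
        title
        for title, topic in CATALOG.items()
        if topic == top_topic and title not in viewed_titles
    ]
    return unread[:limit]


# Three gardening articles read in one session: recommend a fourth.
session_views = ["Soil basics", "Watering 101", "First raised bed"]
suggestions = recommend(session_views)
```

Because the session list can live in memory or in the browser and be discarded when the visit ends, nothing here needs to be tied to a name or an email.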

Practical Guide 2: Embrace Federated Learning & On-Device AI

This is where it gets really clever. Instead of sending all user data to a central cloud server to train your AI, you flip the model.

Federated learning means the AI model learns locally on a user’s device. Only the learned insights—the pattern updates, not the raw data—are sent back to improve the main model. It’s like every chef in a network perfecting a recipe at home and only sharing the final tweak, not every ingredient they tried.
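
Here is a toy sketch of that idea, assuming the simplest possible model (a single weight fitted to `y = w * x`) and a plain weighted average of client results, in the style of federated averaging. The function names and the client datasets are made up for illustration. Real deployments typically add protections such as secure aggregation, because model updates on their own can still reveal something about the data behind them.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]


def local_update(weight: float, data: Dataset, lr: float = 0.01, epochs: int = 5) -> float:
    """Run a few gradient-descent steps on one device's private (x, y) pairs."""
    for _ in range(epochs):
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight


def federated_round(global_weight: float, clients: List[Dataset]) -> float:
    """Average the clients' updated weights, weighted by local sample count."""
    total = sum(len(data) for data in clients)
    return sum(local_update(global_weight, data) * len(data) for data in clients) / total


# Hypothetical private datasets; each roughly follows y = 3x and stays on its device.
clients = [
    [(1.0, 3.1), (2.0, 5.9)],
    [(1.5, 4.4), (3.0, 9.2), (2.5, 7.4)],
    [(0.5, 1.6), (4.0, 12.1)],
]

weight = 0.0
for _ in range(50):
    # Only updated weights reach the server, never the (x, y) pairs.
    weight = federated_round(weight, clients)
```

After the rounds finish, `weight` settles close to 3, even though the server-side function only ever handles the weights returned by `local_update`.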

On-device processing takes this further. The personalization logic runs entirely on the user’s phone or computer. Their data never leaves their device. Sure, the models might start simpler, but the privacy benefit is substantial, since there is no central copy of the data to leak or misuse. It’s a trade-off worth exploring.

Practical Guide 3: Master the Art of Differential Privacy

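This sounds complex, but the concept is beautiful. When you do need to analyze aggregate data, you add a carefully calibrated amount of mathematical “noise” to the data or to the query results. This noise makes it very hard to tell whether any single individual is in the dataset, while the overall trends and patterns stay close to accurate. The amount of noise is the dial: more noise means stronger protection and less precise results.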

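Imagine a town survey asking about income. If you added a random number, centered on zero, to each person’s response, you couldn’t pinpoint Mr. Smith’s salary, but across many responses the noise largely cancels out, so you’d still have a good estimate of the town’s average income. That’s the essence. Companies such as Apple and Google have publicly described using this, and with open-source libraries and cloud tools, it’s becoming more accessible.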
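
A minimal sketch of the town-survey idea, under a few stated assumptions: it uses the Laplace mechanism, it adds noise once to the released average rather than to each response (the "central" variant of the idea), and it treats the number of respondents as public. The `private_mean` function, the income bounds, and the `epsilon` value are illustrative choices, not recommendations.

```python
import random
from typing import List


def private_mean(values: List[float], lower: float, upper: float,
                 epsilon: float, rng: random.Random) -> float:
    """Release the mean of `values` with Laplace noise scaled to epsilon."""
    # Clip so that one person can shift the mean by at most (upper - lower) / n.
    clipped = [min(max(v, lower), upper) for v in values]
    sensitivity = (upper - lower) / len(clipped)
    scale = sensitivity / epsilon
    # The difference of two exponential draws is a Laplace(0, scale) sample.
    noise = rng.expovariate(1 / scale) - rng.expovariate(1 / scale)
    return sum(clipped) / len(clipped) + noise


rng = random.Random(42)  # fixed seed so the sketch is repeatable
incomes = [rng.uniform(20_000, 120_000) for _ in range(1000)]  # made-up survey data

true_mean = sum(incomes) / len(incomes)
noisy_mean = private_mean(incomes, lower=0, upper=200_000, epsilon=1.0, rng=rng)
```

With 1,000 respondents the noise is small next to the average itself, which is why the trend survives. A smaller `epsilon` or a smaller town would make the released number noisier.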

Practical Guide 4: Implement Privacy-Preserving AI Techniques

Here’s a quick look at some technical approaches you can evaluate:

| Technique | What It Does | Privacy Benefit |
| --- | --- | --- |
| Synthetic Data Generation | AI creates artificial datasets that mimic real user behavior patterns. | Trains models on data that looks real but contains no actual user information. |
| Homomorphic Encryption | Allows computations on encrypted data without decrypting it first. | Data stays encrypted even while being processed, so the system never “sees” raw personal info. |
| Aggregate-Only Analysis | Strictly prohibits querying data for individuals; only allows analysis of large groups. | Forces a system-wide view, preventing “snooping” on specific users. |
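
Of the three, aggregate-only analysis is the easiest to sketch. The example below assumes a simple minimum-group-size rule: a query is answered only when enough rows match. The `segment_average` helper, the threshold of 50, and the sample rows are all hypothetical.

```python
from typing import List, Optional

MIN_GROUP_SIZE = 50  # hypothetical threshold; choose one that fits your data


def segment_average(rows: List[dict], segment: str, field: str) -> Optional[float]:
    """Return the average of `field` for a segment, or None if the group is too small."""
    values = [row[field] for row in rows if row["segment"] == segment]
    if len(values) < MIN_GROUP_SIZE:
        return None  # refuse: a tiny group could expose individual users
    return sum(values) / len(values)


# Made-up data: one large segment and one with only three users.
rows = (
    [{"segment": "marathon trainer", "basket_value": 40.0 + (i % 5)} for i in range(100)]
    + [{"segment": "trail runner", "basket_value": 75.0} for _ in range(3)]
)

large_group = segment_average(rows, "marathon trainer", "basket_value")
small_group = segment_average(rows, "trail runner", "basket_value")
```

The large segment gets an answer and the three-person segment gets `None`. A threshold alone does not stop someone from comparing overlapping queries, so it works best alongside the other techniques rather than as a replacement for them.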

Building Trust Through Design

All this tech is pointless if users don’t trust you. Your interface must communicate your ethics. Use clear icons and micro-copy. Instead of “We use cookies,” try “We use local browsing data to show you relevant products—manage your preferences here.” See the difference? The second one explains the value to the user.

And offer granular controls. A simple toggle for “personalized recommendations” that users can flip on or off builds more goodwill than any behind-the-scenes wizardry.
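
In code, that toggle can be as small as one flag checked before any personalized result is shown. This sketch assumes the flag defaults to off until the user opts in, which is a design choice of the example rather than something the toggle requires. The `Preferences` class, `pick_recommendations`, and the fallback list are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Preferences:
    personalized_recommendations: bool = False  # assumption: off until the user opts in


POPULAR_ITEMS = ["bestseller A", "bestseller B"]  # hypothetical non-personalized fallback


def pick_recommendations(prefs: Preferences, personalized: List[str]) -> List[str]:
    """Use personalized results only when the user has switched them on."""
    if prefs.personalized_recommendations and personalized:
        return personalized
    return POPULAR_ITEMS


opted_out = pick_recommendations(Preferences(), ["hydration vest"])
opted_in = pick_recommendations(
    Preferences(personalized_recommendations=True), ["hydration vest"]
)
```

Users who leave the toggle off still get something useful, just not something derived from their own behavior.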

The Path Forward: It’s a Balance, Not a Barrier

Look, implementing AI-powered personalization ethically might feel like walking a tightrope at first. You might have to accept that your product recommendations will be, say, 95% accurate instead of 98%. But those three percentage points? That’s the cost of user trust. And honestly, that trade-off is a bargain.

The future of personalization isn’t about knowing more in secret. It’s about being smarter with less, and being open about it. It’s about creating a value exchange so clear that users are happy to participate. Start with anonymous signals, explore new techniques like federated learning, and always, always put the user’s choice front and center.

The most powerful personalization, after all, is the kind that makes a user feel understood—not watched.